DeepSeek V3 is a Mixture-of-Experts (MoE) language model with 671 billion total parameters, of which 37 billion are activated for each token. Training was notably cost-efficient: the full run took 2.788 million H800 GPU hours, which comes to about $5.576 million at an assumed rental price of $2 per GPU hour.

Trained on 14.8 trillion diverse tokens, the model performs especially well on math and code tasks and outperforms other open-source models on most benchmarks. It runs on AMD Instinct™ GPUs with the ROCm™ software stack, giving developers a platform for text and visual data processing. FP8 support on this stack improves inference performance and efficiency.

Understanding DeepSeek V3 Architecture

DeepSeek V3 is built on a Mixture-of-Experts (MoE) design that aims for high efficiency without giving up performance.

Overview of 671B Parameter MoE Model

For each token, DeepSeek V3 activates only 37 billion of its 671 billion parameters (about 5.5%). This sparse activation lets the model process tokens quickly without compromising quality. The model also introduces an auxiliary-loss-free load-balancing strategy, which keeps expert usage even while avoiding the performance degradation that auxiliary balancing losses can cause.
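The idea behind auxiliary-loss-free balancing can be illustrated with a toy simulation. The sketch below is a simplification under stated assumptions, not DeepSeek's implementation: each expert carries a bias that is added to its affinity score only when choosing the top-k experts, and the bias is nudged down for overloaded experts and up for underloaded ones after every step. The expert count, the skewed affinities, the noise range, and the step size `gamma` are all made-up illustration values.

```python
import random

NUM_EXPERTS, TOP_K, TOKENS_PER_STEP = 8, 2, 256
# Hypothetical skew: experts 0 and 1 are systematically preferred by the router.
BASE_AFFINITY = [0.6, 0.5, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4]


def route(scores, bias, k=TOP_K):
    """Select top-k experts by biased score; gate weights use the unbiased scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}


def batch_load(bias, rng):
    """Route one batch of synthetic tokens and count tokens per expert."""
    load = [0] * NUM_EXPERTS
    for _ in range(TOKENS_PER_STEP):
        scores = [a + rng.uniform(0.0, 0.2) for a in BASE_AFFINITY]
        for expert in route(scores, bias):
            load[expert] += 1
    return load


def update_bias(bias, load, gamma=0.01):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean = sum(load) / len(load)
    return [b - gamma if n > mean else b + gamma if n < mean else b
            for b, n in zip(bias, load)]


def simulate(steps=200, seed=0):
    """Return per-expert load before and after the bias has been adjusted."""
    rng = random.Random(seed)
    bias = [0.0] * NUM_EXPERTS
    before = batch_load(bias, rng)
    for _ in range(steps):
        bias = update_bias(bias, batch_load(bias, rng))
    after = batch_load(bias, rng)
    return before, after


if __name__ == "__main__":
    before, after = simulate()
    print("load without bias:", before)
    print("load with bias:   ", after)
```

Because the bias only affects which experts are selected and not how their outputs are weighted, no extra loss term has to compete with the language-modeling objective.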

Exploring the Multi-head Latent Attention Mechanism in DeepSeek V3

The Multi-head Latent Attention (MLA) mechanism, carried over from DeepSeek V2, is a key part of the architecture. It makes attention more memory-efficient by applying low-rank joint compression to the attention keys and values. Only the compressed latent vectors need to be cached, which sharply reduces key-value (KV) cache size during inference.
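A rough back-of-the-envelope comparison shows why caching only the latent vector matters. The dimensions below are assumptions for illustration (128 heads of size 128, a 512-dimensional KV latent, and a 64-dimensional decoupled positional key); they do not come from this article, so substitute the values from the model configuration you actually deploy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionShape:
    # All four values are assumed for illustration, not taken from the article.
    num_heads: int = 128
    head_dim: int = 128
    kv_latent_dim: int = 512
    rope_dim: int = 64


def mha_cache_elems(shape: AttentionShape) -> int:
    """Standard multi-head attention caches a full key and value per head."""
    return 2 * shape.num_heads * shape.head_dim


def mla_cache_elems(shape: AttentionShape) -> int:
    """MLA caches one shared latent vector plus a small positional key."""
    return shape.kv_latent_dim + shape.rope_dim


if __name__ == "__main__":
    shape = AttentionShape()
    full, latent = mha_cache_elems(shape), mla_cache_elems(shape)
    print(f"per token, per layer: MHA={full} elements, MLA={latent} elements")
    print(f"reduction factor: {full / latent:.1f}x")
```

Under these assumed dimensions the cached state per token shrinks by more than an order of magnitude, which is what allows longer contexts and larger batches on the same hardware.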

Cost-Effective Training Approach

The training framework combines several techniques that improve efficiency:

- FP8 mixed precision training that speeds up processing and uses less GPU memory

- DualPipe algorithm that streamlines pipeline parallelism and reduces communication overhead

- Multi-token prediction training objective that improves benchmark performance

Pre-training was stable throughout, with no irrecoverable loss spikes and no rollbacks. Cross-node communication is optimized to make full use of InfiniBand and NVLink bandwidth, which keeps training efficiency high.

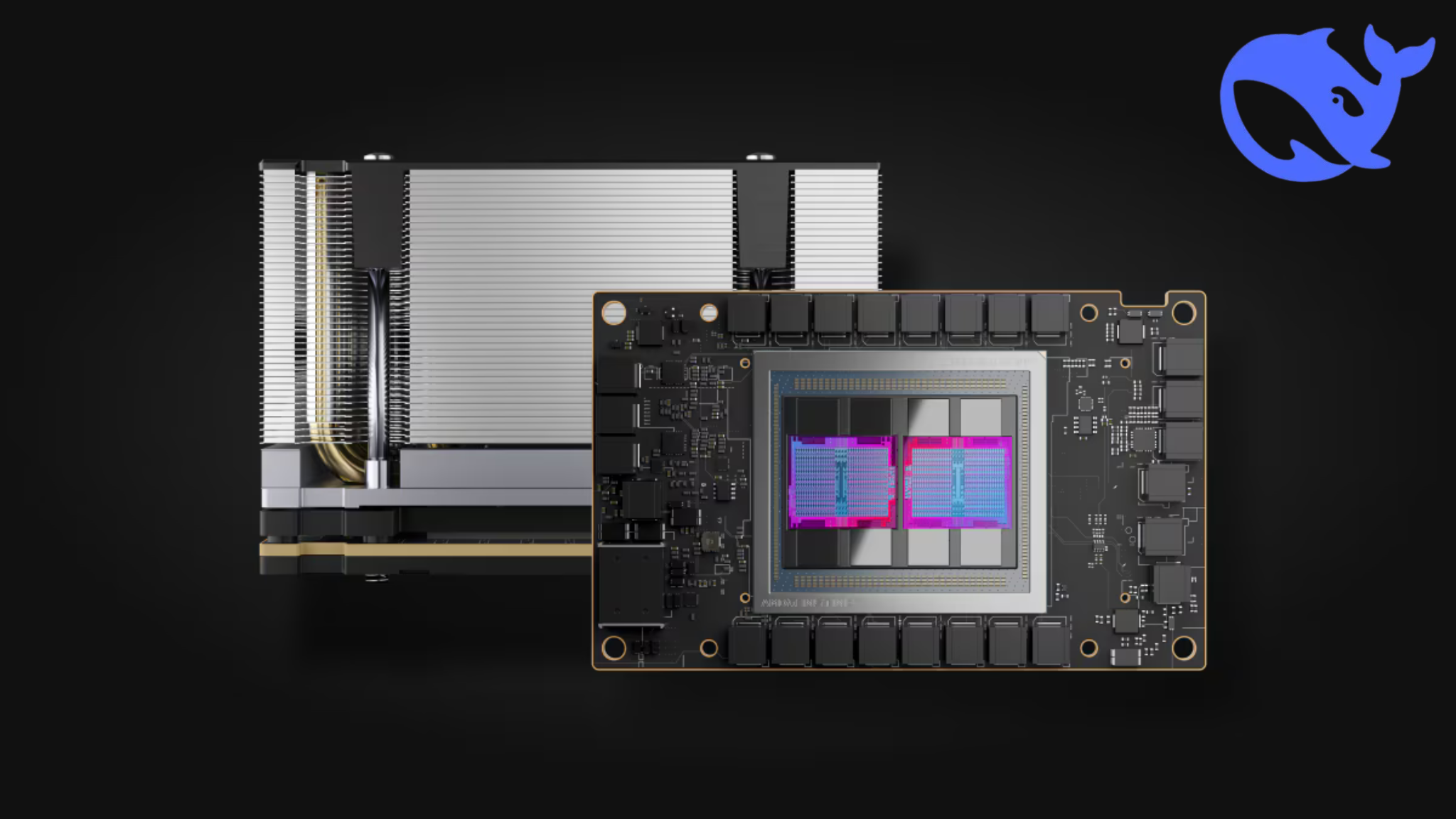

AMD Instinct GPU Implementation with DeepSeek V3

AMD supports DeepSeek V3 deployment on its Instinct GPUs.

Hardware Requirements and Setup

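The DeepSeek V3 implementation runs on the AMD Instinct MI300X GPU. This accelerator provides the compute power and memory bandwidth needed to process text and visual data. Note that Instinct accelerators are driven by the ROCm software stack; the Adrenalin 25.1.1 driver applies to consumer Radeon GPUs, so check the ROCm documentation for the driver version that matches your system.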

SGLang Framework Integration

AMD and SGLang teams worked together to create complete framework support for DeepSeek V3. SGLang brings these features to AMD GPU deployment:

- RadixAttention that runs up to 5x faster for inference

- Full compatibility with both FP8 and BF16 precision modes

- Tensor parallelism and continuous batching capabilities
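Once an SGLang server is running, it can be queried through its OpenAI-compatible HTTP endpoint. The following is a minimal sketch using only the Python standard library. It assumes the server was started separately (for example with `python3 -m sglang.launch_server --model deepseek-ai/DeepSeek-V3 --tp 8 --trust-remote-code`) and is listening on `localhost:30000`; the host, port, model name, and the helper names `build_chat_request` and `send_chat` are assumptions for illustration.

```python
import json
import urllib.request


def build_chat_request(prompt, model="deepseek-ai/DeepSeek-V3", max_tokens=128):
    """Build an OpenAI-style chat completion payload (model name is an assumption)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }


def send_chat(payload, base_url="http://localhost:30000"):
    """POST the payload to the server's chat completions route and return the reply text.

    base_url is an assumed local address; change it to match your deployment.
    """
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Summarize Mixture-of-Experts in one sentence.")
    print(send_chat(payload))
```

Requests that share a long common prefix, such as a fixed system prompt, are the case where prefix caching like RadixAttention is expected to help most.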

Performance Optimization Techniques

The ROCm platform improves model efficiency through several optimization strategies. Extensive FP8 support addresses memory bottlenecks, which lets larger models run within current hardware limits, and the reduced-precision calculations lower data-transfer latency.

CK-tile based kernels further speed up execution. The platform supports both FP8 and BF16 inference, so developers can pick the precision level that suits their needs. This dual-mode support and AMD’s open software approach help developers build advanced visual reasoning applications while keeping performance high.

Deployment Strategies and Best Practices for DeepSeek V3

DeepSeek V3’s successful deployment needs a good grasp of precision modes, memory management, and scaling strategies.

FP8 vs BF16 Mode Selection

DeepSeek V3 is mainly deployed in FP8 mode, because FP8 precision was built into the training process. BF16 mode is also available through a conversion script for specific use cases. Your deployment requirements should guide the choice: FP8 uses less memory and runs faster, while BF16 offers higher numerical precision.
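The memory side of this trade-off is easy to estimate. The sketch below counts weight storage only (1 byte per parameter in FP8, 2 bytes in BF16) and ignores scaling factors, activations, and the KV cache, so treat the results as lower bounds. The node layout of eight GPUs with 192 GB of HBM each is an assumption for illustration.

```python
TOTAL_PARAMS_BILLION = 671           # total parameter count from the article
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}
GPUS_PER_NODE = 8                    # assumed node layout
HBM_GB_PER_GPU = 192                 # assumed per-GPU memory (MI300X class)


def weight_footprint_gb(mode: str) -> int:
    """Weights only, in decimal gigabytes: billions of params times bytes per param."""
    return TOTAL_PARAMS_BILLION * BYTES_PER_PARAM[mode]


def headroom_gb(mode: str) -> int:
    """Memory left on one node for KV cache and activations after loading weights."""
    return GPUS_PER_NODE * HBM_GB_PER_GPU - weight_footprint_gb(mode)


if __name__ == "__main__":
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: weights ~{weight_footprint_gb(mode)} GB, "
              f"headroom ~{headroom_gb(mode)} GB on one node")
```

Under these assumptions both modes fit on a single node, but BF16 leaves far less room for the KV cache and batching than FP8 does.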

Memory Management Optimization

Efficient memory usage is central to DeepSeek V3 deployment. The key optimization techniques are:

- Prefilling and decoding stage separation to boost GPU load management

- FP8 KV cache implementation to reduce memory footprint

- Dynamic routing with redundant expert hosting

The framework benefits from a modular deployment strategy that adjusts computational resources to the workload. This approach also allows seamless integration with PyTorch-based workflows while keeping performance high.
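To see what an FP8 KV cache saves at long context, the estimate below multiplies context length by the cached elements per token. The layer count (61) and the cached width per layer (512 latent plus 64 positional elements) are assumptions for illustration and are not stated in this article; adjust them to the configuration you deploy.

```python
NUM_LAYERS = 61                      # assumed layer count
CACHED_ELEMS_PER_LAYER = 512 + 64    # assumed latent + positional key width


def kv_cache_bytes(context_tokens: int, bytes_per_elem: int) -> int:
    """Total KV cache size for one sequence of the given length."""
    return context_tokens * NUM_LAYERS * CACHED_ELEMS_PER_LAYER * bytes_per_elem


def to_gib(num_bytes: int) -> float:
    """Convert bytes to binary gigabytes."""
    return num_bytes / 2**30


if __name__ == "__main__":
    context = 128 * 1024  # a 128K-token context window
    for name, width in (("fp8", 1), ("bf16", 2)):
        size = to_gib(kv_cache_bytes(context, width))
        print(f"{name} KV cache at {context} tokens: {size:.2f} GiB per sequence")
```

Halving the element width halves the cache per sequence, which translates directly into room for more concurrent requests.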

Scaling Across Multiple GPUs

Multi-GPU deployment should aim to minimize communication overhead between nodes. During training, the DualPipe algorithm overlaps computation with communication to achieve near-zero all-to-all communication overhead, which is what makes scaling efficient. For inference, fill each node with GPUs before adding more machines, since traffic inside a node is much cheaper than traffic between nodes.

The vLLM framework (v0.6.6) supports both pipeline and tensor parallelism, which enables distributed deployment across networked machines. Specialized communication kernels maximize bandwidth usage between nodes to improve throughput. The framework supports multiple precision modes on both NVIDIA and AMD hardware, which gives broad compatibility for large-scale deployments.
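One common way to map a cluster onto these two forms of parallelism is tensor parallelism within a node and pipeline parallelism across nodes. The helper below builds a `vllm serve` command line along those lines. It is a sketch under assumptions: the model identifier, the node sizes, and the helper name `vllm_serve_command` are illustrative, and a multi-node launch additionally requires a distributed backend to be set up first, which is not shown here.

```python
import shlex


def vllm_serve_command(model: str, gpus_per_node: int, num_nodes: int) -> list:
    """Build a vllm serve invocation: tensor parallel inside a node, pipeline across nodes."""
    if gpus_per_node < 1 or num_nodes < 1:
        raise ValueError("gpus_per_node and num_nodes must be positive")
    return [
        "vllm", "serve", model,
        "--tensor-parallel-size", str(gpus_per_node),
        "--pipeline-parallel-size", str(num_nodes),
        "--trust-remote-code",
    ]


if __name__ == "__main__":
    # Assumed example layout: two nodes with eight GPUs each.
    command = vllm_serve_command("deepseek-ai/DeepSeek-V3", gpus_per_node=8, num_nodes=2)
    print(shlex.join(command))
```

Keeping the tensor-parallel group inside one node confines the most frequent collective operations to the fastest links.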

DeepSeek V3: Performance Benchmarks and Analysis

DeepSeek V3’s performance metrics show strong efficiency in both training and inference across a wide range of benchmarks.

Comparison with NVIDIA H800

The model was trained on a cluster of 2,048 H800 GPUs and needed just 2.788 million GPU hours. The training cost came to about $5.576 million, well below what traditional approaches require. GPU utilization was optimized through specialized communication kernels and pipeline parallelism.
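These figures can be checked with simple arithmetic. The snippet below reproduces the cost from the GPU-hour total and also derives the implied wall-clock time on 2,048 GPUs. The $2 per GPU hour rental price is an assumption, and the wall-clock estimate further assumes all GPUs were busy for the whole run.

```python
GPU_HOURS = 2_788_000        # total H800 GPU hours from the article
NUM_GPUS = 2_048             # cluster size from the article
PRICE_PER_GPU_HOUR = 2.0     # assumed rental price in USD


def training_cost_usd(gpu_hours: float, price: float) -> float:
    """Total cost is GPU hours multiplied by the hourly rental price."""
    return gpu_hours * price


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Elapsed days if every GPU runs for the full duration."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    print(f"cost: ${training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR):,.0f}")
    print(f"wall clock: {wall_clock_days(GPU_HOURS, NUM_GPUS):.1f} days")
```

The cost works out to $5,576,000, and the implied duration is a little under two months of continuous training.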

Math and Code Task Performance

DeepSeek V3 excels at specialized tasks:

- Coding Excellence: Reached the 51.6th percentile on Codeforces, beating GPT-4o and Claude 3.5 Sonnet by a wide margin

- Mathematical Reasoning: Beat o1-preview on the MATH-500 benchmark

- Polyglot Performance: Achieved 48.5% accuracy on the Aider Polyglot benchmark, while Claude 3.5 Sonnet reached 45.3%

Comprehensive evaluations show that DeepSeek V3 consistently beats Llama 3.1 405B and Qwen 2.5 72B across a wide range of benchmarks. These gains are attributed to the model’s specialized training on coding and mathematical datasets.

Latency and Throughput Metrics for DeepSeek V3

DeepSeek V3 delivers an output speed of 12.0 tokens per second with a first-token latency of 0.97 seconds. The model works consistently well with context windows up to 128K tokens. In DeepSeek’s own large-scale serving setup, which separates the two stages, the minimum deployment unit is 32 GPUs for prefill and 320 GPUs for decoding.

FP8 support addresses both memory bottlenecks and high latency. It allows larger models and larger batches to run within existing hardware limits, which makes training and inference more efficient.
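The two latency figures combine into a simple estimate of end-to-end response time: the wait for the first token plus the remaining tokens divided by the output speed. The sketch below assumes a constant decode rate, which real deployments only approximate.

```python
FIRST_TOKEN_LATENCY_S = 0.97   # from the article
OUTPUT_TOKENS_PER_S = 12.0     # from the article


def response_time_s(output_tokens: int) -> float:
    """Estimated seconds to stream a full response at a constant decode rate."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return FIRST_TOKEN_LATENCY_S + output_tokens / OUTPUT_TOKENS_PER_S


if __name__ == "__main__":
    for tokens in (120, 600, 1200):
        print(f"{tokens} tokens: ~{response_time_s(tokens):.1f} s")
```

For responses longer than a few dozen tokens, decode speed dominates the total, so throughput improvements matter more than first-token latency.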

Conclusion

DeepSeek V3 represents a major breakthrough in language model development. Its innovative design combines an efficient architecture with exceptional performance. The model uses just 37 billion parameters per token but can access its full 671 billion parameter capacity thanks to its MoE architecture.

AMD Instinct GPUs extend the model’s reach. Features such as FP8 support and RadixAttention acceleration make inference up to 5 times faster, and users can choose between precision modes to match their needs.

The numbers speak for themselves. DeepSeek V3 performs better than GPT-4o and Claude 3.5 Sonnet in specific areas. It reaches the 51.6th percentile on Codeforces and posts strong results in mathematical reasoning. The training process is remarkably efficient: it took only 2.788 million GPU hours and cost about $5.576 million.

DeepSeek V3’s architecture sets a strong reference point for deploying large AI models. Careful memory management and efficient scaling let the model work well across many different tasks, and these improvements should carry over to more capable AI systems as the technology evolves.