DeepSeek reportedly built its AI technology for about $6 million, while U.S. companies plan to invest nearly $1 trillion in AI development. That cost gap quickly unsettled markets: major tech stocks fell, and Nvidia lost about $600 billion in market value in a single day. DeepSeek had already started a price war in China with an earlier model priced at only $0.14 per million tokens. Businesses and developers now face a key decision about their AI investments: does DeepSeek or Claude 3 offer better value? Let’s examine what each platform actually costs and delivers.

Cost Breakdown Analysis

The price differences between these AI models are striking. Claude 3 Sonnet charges $3.00 per million input tokens and $15.00 per million output tokens, while DeepSeek-V3 charges just $0.14 and $0.28. For one million input tokens plus one million output tokens, that is $18.00 versus $0.42, which makes DeepSeek-V3 about 42.9 times cheaper.
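The 42.9x figure assumes equal volumes of input and output tokens. The ratio shifts with the mix. The following is a minimal Python sketch using the list prices quoted above. The model keys are illustrative labels, not official API model IDs. `Decimal` is used so that dollar amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal, ROUND_HALF_UP

# List prices in USD per million tokens (input, output), as quoted in this article.
# The dictionary keys are illustrative labels, not official API model IDs.
PRICES = {
    "claude-3-sonnet": (Decimal("3.00"), Decimal("15.00")),
    "deepseek-v3": (Decimal("0.14"), Decimal("0.28")),
}

def cost(model: str, input_mtok: Decimal, output_mtok: Decimal) -> Decimal:
    """USD cost for a workload measured in millions of input and output tokens."""
    price_in, price_out = PRICES[model]
    return price_in * input_mtok + price_out * output_mtok

def price_ratio(input_mtok: Decimal, output_mtok: Decimal) -> Decimal:
    """How many times more Claude 3 Sonnet costs than DeepSeek-V3 for the same workload."""
    return cost("claude-3-sonnet", input_mtok, output_mtok) / cost("deepseek-v3", input_mtok, output_mtok)

# Compare an even mix with input-only and output-only workloads.
mixes = {
    "1M in + 1M out": (Decimal(1), Decimal(1)),
    "input only": (Decimal(1), Decimal(0)),
    "output only": (Decimal(0), Decimal(1)),
}
for label, (inp, out) in mixes.items():
    ratio = price_ratio(inp, out).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)
    print(f"{label}: {ratio}x")
```

With these prices, the gap ranges from about 21.4x for input-heavy workloads to about 53.6x for output-heavy ones. The often-quoted 42.9x sits in between.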

Per-Token Pricing Comparison

DeepSeek-V3’s price advantage grows even larger against Claude 3 Opus, which charges $15.00 per million input tokens and $75.00 per million output tokens. That comes to $90.00 for one million tokens of each, or about 214 times DeepSeek-V3’s $0.42. The savings add up quickly for companies that process large volumes of data.
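The following sketch shows how the gap scales with volume. It prices a hypothetical month of 2 billion input tokens and 500 million output tokens at the list rates quoted above. The workload size is an assumption chosen only for illustration.

```python
from decimal import Decimal

# USD per million tokens (input, output), as quoted in this article.
PRICES = {
    "claude-3-opus": (Decimal("15.00"), Decimal("75.00")),
    "claude-3-sonnet": (Decimal("3.00"), Decimal("15.00")),
    "deepseek-v3": (Decimal("0.14"), Decimal("0.28")),
}

def monthly_bill(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """USD cost of one month's token volume, given raw token counts."""
    price_in, price_out = PRICES[model]
    return (price_in * input_tokens + price_out * output_tokens) / Decimal(1_000_000)

# Hypothetical workload: 2 billion input and 500 million output tokens per month.
INPUT_TOKENS = 2_000_000_000
OUTPUT_TOKENS = 500_000_000

for model in PRICES:
    print(f"{model}: ${monthly_bill(model, INPUT_TOKENS, OUTPUT_TOKENS):,.2f}")
```

Under these assumptions, the bill is $67,500 for Claude 3 Opus, $13,500 for Claude 3 Sonnet, and $420 for DeepSeek-V3.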

DeepSeek vs Claude 3: API Costs and Usage Limits

These price differences have practical consequences. For instance, processing 500 queries costs about $7.50 with Claude 3 Sonnet, while DeepSeek-Chat handles the same job for just $0.14. At those per-query rates ($0.015 versus $0.00028), a $20.00 monthly budget covers about 71,428 queries with DeepSeek but only about 1,333 with Claude 3 Sonnet.
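The per-query figures above are consistent with each query producing about 1,000 billable output tokens, with input tokens ignored. The sketch below reproduces them under that assumption, which is ours and not the article's source. Real queries also consume input tokens, so actual costs will be higher.

```python
from decimal import Decimal

# Assumption: each query yields ~1,000 billable output tokens; input tokens are ignored.
TOKENS_PER_QUERY = 1_000

# USD per million output tokens, as quoted in this article (illustrative model labels).
OUTPUT_PRICE_PER_M = {
    "claude-3-sonnet": Decimal("15.00"),
    "deepseek-chat": Decimal("0.28"),
}

def cost_per_query(model: str) -> Decimal:
    return OUTPUT_PRICE_PER_M[model] * TOKENS_PER_QUERY / Decimal(1_000_000)

def cost_for_queries(model: str, queries: int) -> Decimal:
    return cost_per_query(model) * queries

def queries_within_budget(model: str, budget_usd: Decimal) -> int:
    # Floor division: only whole queries fit within the budget.
    return int(budget_usd // cost_per_query(model))

for model in OUTPUT_PRICE_PER_M:
    print(model, cost_for_queries(model, 500), queries_within_budget(model, Decimal("20.00")))
```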

Hidden Infrastructure Expenses

The true costs extend well beyond token pricing. Clusters of 10,000 or more A100/H100 GPUs can run up power bills exceeding $10 million a year. Hardware costs even more: at about $30,000 per H100, a fleet of roughly 33,000 GPUs costs about $1 billion.
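The sketch below makes the hardware and power arithmetic explicit. The per-GPU draw (about 700 W, the H100 SXM board power), the facility overhead factor (PUE of 1.5), and the $0.10/kWh electricity price are illustrative assumptions, not figures from the article's sources.

```python
HOURS_PER_YEAR = 24 * 365

def annual_power_cost(gpus: int, watts_per_gpu: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost: GPU draw scaled by facility overhead (PUE)."""
    kilowatts = gpus * watts_per_gpu * pue / 1000
    return kilowatts * HOURS_PER_YEAR * usd_per_kwh

def gpu_capex(gpus: int, unit_price: int = 30_000) -> int:
    """Up-front hardware cost at the article's ~$30,000 per H100."""
    return gpus * unit_price

def gpus_for_budget(budget: int, unit_price: int = 30_000) -> int:
    """Whole GPUs a hardware budget can buy."""
    return budget // unit_price

print(f"Capex for 10,000 H100s: ${gpu_capex(10_000):,}")
print(f"H100s a $1B budget buys: {gpus_for_budget(1_000_000_000):,}")
# Assumed: 700 W per GPU, PUE 1.5, $0.10 per kWh.
print(f"Power for 10,000 GPUs: ${annual_power_cost(10_000, 700, 1.5, 0.10):,.0f}/yr")
```

Under these assumptions, 10,000 H100s cost $300 million up front and draw about $9.2 million of electricity a year for the GPUs alone. That makes a total above $10 million plausible once servers, networking, and higher power prices are included. A $1 billion hardware figure corresponds to about 33,333 GPUs.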

Annual operating costs for these AI systems typically fall between $500 million and $1 billion. These figures cover far more than raw compute: research, development, and maintenance make up a large share, and specialized experts and reliable technical support add substantially to the total.

Task Performance vs Cost

Benchmark results show DeepSeek-V3 performing strongly across a range of tasks. It scores 88.5% on the English MMLU benchmark, ahead of Claude 3 Sonnet’s 77.1%.

Text Generation Efficiency

DeepSeek-V3 uses a mixture-of-experts architecture with 671 billion total parameters, of which only 37 billion are activated for each token. This design delivers strong results at lower cost. The model generates text at about 43.5 tokens per second, with a time to first token of 2.08 seconds. Because only a small fraction of the parameters is active, each token needs far less compute than it would in a dense model of the same size.
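The figures above can be turned into two quick estimates: the share of parameters active per token, and a rough response time for an answer of a given length. The latency model is a simplifying assumption. It treats generation as a fixed wait for the first token followed by a steady output rate, which ignores network overhead and load variation.

```python
# Figures quoted in this article for DeepSeek-V3.
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37
TOKENS_PER_SECOND = 43.5
TIME_TO_FIRST_TOKEN_S = 2.08

def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for each token."""
    return active / total

def response_time(output_tokens: int,
                  ttft: float = TIME_TO_FIRST_TOKEN_S,
                  tps: float = TOKENS_PER_SECOND) -> float:
    """Rough latency (assumed model): first-token wait, then a steady generation rate."""
    return ttft + output_tokens / tps

print(f"Active share of parameters: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
print(f"~500-token answer: {response_time(500):.1f} s")
```

Roughly 5.5% of the weights are used per token, and a 500-token answer takes on the order of 13 to 14 seconds at the quoted speeds.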

Code Generation with DeepSeek Coder V2

DeepSeek Coder V2 performs strongly on coding benchmarks at the same low token prices: $0.14 for input and $0.28 for output per million tokens. The model reached 48.5% accuracy on the Polyglot benchmark, which tests code generation across multiple programming languages, and scored 51.6 on Codeforces.

DeepSeek vs Claude 3: Mathematical Problem Solving Costs

The cost advantage is especially clear in mathematics. DeepSeek-V3 achieved 90.2% accuracy on the MATH-500 benchmark while keeping prices low, and DeepSeek’s reasoning model, DeepSeek-R1, reached 79.8% on the AIME 2024 benchmark, ahead of other leading models.

Additional benchmark results from DocsBot’s model comparison pages:

| Benchmark | What it measures | Reported score (setting) | Source |
|---|---|---|---|
| MMLU-Pro | A more robust MMLU benchmark with harder, reasoning-focused questions, a larger choice set, and reduced prompt sensitivity | 75.9% (EM) | https://docsbot.ai/models/compare/deepseek-v3/claude-3-sonnet |
| MATH | Mathematical problem-solving across various difficulty levels | 61.6% (4-shot) | https://docsbot.ai/models/compare/deepseek-v3/claude-3-sonnet |
| GPQA | Graduate-level, search-resistant science questions | 43.1% (0-shot CoT) | https://docsbot.ai/models/compare/claude-3-sonnet/deepseek-r1 |

The model’s architecture delivers these scores while keeping operational costs low. Training required just 2.788 million H800 GPU hours, a large saving in compute with no apparent sacrifice in benchmark performance.
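GPU hours translate into dollars and calendar time as follows. This sketch assumes the $2 per H800 GPU-hour rental rate used in DeepSeek’s V3 technical report, and the 2,048-GPU cluster size cited later in this article. The wall-clock figure further assumes that every GPU is busy for the whole run.

```python
GPU_HOURS = 2_788_000     # H800 GPU hours reported for DeepSeek-V3 training
USD_PER_GPU_HOUR = 2.0    # assumed rental rate, as in DeepSeek's V3 report
CLUSTER_GPUS = 2_048      # H800 cluster size cited in this article

# Dollar cost of the reported compute at the assumed rental rate.
training_cost = GPU_HOURS * USD_PER_GPU_HOUR

# Calendar time if all GPUs run continuously (an idealized assumption).
wall_clock_days = GPU_HOURS / CLUSTER_GPUS / 24

print(f"Training cost: ${training_cost:,.0f}")
print(f"Wall-clock time: {wall_clock_days:.1f} days")
```

This yields about $5.58 million, the figure that appears elsewhere in this article, and roughly 57 days of continuous use of the full cluster.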

DeepSeek vs Claude 3: Real-World Use Cases

Real-world deployments show how cost efficiency plays out in organizations of different sizes. At list prices, DeepSeek-V3’s blended token cost is about 42.9 times lower than Claude 3 Sonnet’s.

Enterprise Implementation Costs

Large companies benefit from DeepSeek’s efficient resource usage. The model activates only 37 billion of its 671 billion parameters per token, a design credited with cutting infrastructure costs by 53% compared with conventional dense models. DeepSeek’s MIT License adds to the appeal, because companies can modify and redistribute the software freely.

Startup Budget Considerations

DeepSeek offers startups an attractive financial package. The platform works well with tight budgets and charges $0.14 per million tokens for input and $0.28 per million tokens for output. Startups don’t need expensive infrastructure to get competitive performance levels with DeepSeek’s efficient design.

These numbers show why DeepSeek works well for startups:

| Resource | DeepSeek | Industry average |
|---|---|---|
| Training compute | 2.788M GPU hours | 10M+ GPU hours |
| Training cost | $5.58M | $100M+ |
| API price per million tokens | $0.55 | $15.00 |

Developer Team Usage Patterns

Developer teams benefit from both speed and price. DeepSeek cites a generation speed of about 60 tokens per second, so teams can iterate quickly without breaking the bank. The benchmark results most relevant to technical teams include:

- Code generation: 82.6% accuracy on HumanEval-Mul

- General knowledge: 88.5% accuracy on English MMLU

- Complex reasoning: strong performance on advanced language tasks

Budget-conscious companies that need powerful AI capabilities find that DeepSeek fits well. Its efficient design and open-source licensing let teams customize their solutions and stay within budget, and a growing number of organizations choose DeepSeek for its balance of performance and cost.

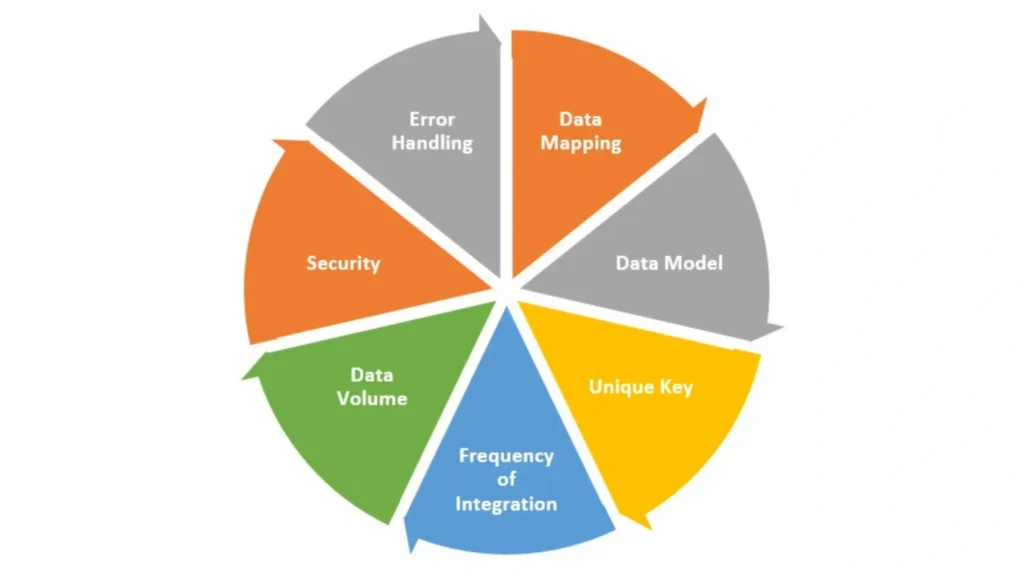

Integration Requirements: DeepSeek vs Claude 3

Setting up AI infrastructure requires careful attention to technical requirements and costs. DeepSeek and Claude 3 differ in integration complexity, and each brings its own challenges and opportunities.

API Implementation Complexity

Self-hosting DeepSeek calls for specialized hardware, and it works best on H800 GPUs. Although the model has 671B parameters, only 37B are activated per token. This keeps per-token compute low, but all the weights must still be held in memory. Integrations must also respect DeepSeek’s 128K-token context window, which is smaller than Claude 3’s 200K.
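At the API level, both providers accept JSON over HTTPS, so calling either one is comparatively simple. The following is a minimal sketch using only Python’s standard library. It builds one request per provider without sending it, and checks a prompt against the context limits quoted above.

The endpoint URLs, headers, and model IDs (`deepseek-chat`, `claude-3-sonnet-20240229`) reflect the providers’ public documentation as we understand it and may change. The token counts passed to `fits_context` are assumed to come from your own tokenizer.

```python
import json
import urllib.request

# Context limits quoted in this article, in tokens.
CONTEXT_WINDOW = {"deepseek-chat": 128_000, "claude-3-sonnet-20240229": 200_000}

def fits_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """True if the prompt plus the requested output fits in the model's context window."""
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOW[model]

def deepseek_request(api_key: str, prompt: str, max_tokens: int = 1024) -> urllib.request.Request:
    # DeepSeek exposes an OpenAI-compatible chat completions endpoint.
    body = {"model": "deepseek-chat", "max_tokens": max_tokens,
            "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        "https://api.deepseek.com/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def claude_request(api_key: str, prompt: str, max_tokens: int = 1024) -> urllib.request.Request:
    # Anthropic's Messages API requires max_tokens and an anthropic-version header.
    body = {"model": "claude-3-sonnet-20240229", "max_tokens": max_tokens,
            "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={"x-api-key": api_key, "anthropic-version": "2023-06-01",
                 "content-type": "application/json"},
        method="POST",
    )

# Build (but do not send) a request; pass it to urllib.request.urlopen to call the API.
req = deepseek_request("YOUR_DEEPSEEK_KEY", "Summarize this contract.")
print(req.full_url, req.get_method())
# A 150K-token prompt exceeds DeepSeek's 128K window but fits Claude 3's 200K window.
print(fits_context("deepseek-chat", 150_000, 1_024), fits_context("claude-3-sonnet-20240229", 150_000, 1_024))
```

The context check matters in practice. Long documents that fit in Claude 3’s window may need to be split before they are sent to DeepSeek.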

Technical Infrastructure Needs

DeepSeek’s training infrastructure rests on substantial hardware:

| Component | Specification | Cost impact or role |
|---|---|---|
| GPU cluster | 2,048 H800 GPUs | $5.58M total training cost |
| Streaming multiprocessors (SMs) | 20 of 132 SMs per H800 | Reserved for communication management |
| Training throughput | 180K H800 GPU hours per trillion tokens | About 3.7 days per trillion tokens on the full cluster |
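The "3.7 days" and SM figures in the table follow from simple division. This sketch assumes that the whole 2,048-GPU cluster runs continuously, which is an idealization.

```python
GPU_HOURS_PER_TRILLION_TOKENS = 180_000  # H800 GPU hours per trillion training tokens
CLUSTER_GPUS = 2_048

def days_per_trillion_tokens(gpu_hours: float = GPU_HOURS_PER_TRILLION_TOKENS,
                             gpus: int = CLUSTER_GPUS) -> float:
    """Calendar days per trillion tokens, assuming every GPU is busy the whole time."""
    return gpu_hours / gpus / 24

def sm_share_for_communication(reserved: int = 20, total: int = 132) -> float:
    """Fraction of each H800's streaming multiprocessors set aside for communication."""
    return reserved / total

print(f"{days_per_trillion_tokens():.1f} days per trillion tokens")
print(f"{sm_share_for_communication():.1%} of SMs reserved for communication")
```

About 15% of each GPU’s compute units go to communication, and halving the cluster would roughly double the days per trillion tokens.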

Self-hosting requires significant investment in specialized hardware. Companies that run DeepSeek themselves must keep high-bandwidth, low-latency networks running smoothly, and they need reliable data storage and flexible processing frameworks.

DeepSeek vs Claude 3: Support and Maintenance Costs

DeepSeek’s infrastructure also carries substantial operating costs. As noted earlier, clusters of more than 10,000 A100/H100 GPUs can use over $10M of electricity a year. At $30,000 per H100, a fleet of roughly 33,000 GPUs costs nearly $1B in hardware alone.

Support costs include:

- Technical staff: a team of 139 specialized personnel

- Annual operating costs between $500M and $1B

- Regular infrastructure updates and optimization

Total cost of ownership extends beyond the initial setup. Companies need resources for ongoing upkeep, security patches, and technical support, all of which keep systems reliable and performing well.

Infrastructure planning must account for both short- and long-term financial commitments. Hybrid deployments that combine cloud and on-premises resources can help balance cost and performance.

Long-Term ROI Comparison

Long-term investment returns reveal clear patterns in how AI platforms generate value. An IBM study found that enterprise-wide AI initiatives yield an average ROI of 5.9%, which is below the typical 10% cost of capital.

Monthly vs Annual Pricing

Claude’s subscription plans come in several tiers: Pro at $20 per month; Team at $30 per user per month, or $25 with annual billing; and Enterprise starting at $60 per seat with a minimum of 70 users. DeepSeek instead uses usage-based API pricing at much lower rates: $0.14 per million input tokens and $0.28 per million output tokens.

The pricing comparison shows big differences:

| Cost item | DeepSeek | Claude |
|---|---|---|
| Input tokens (Claude 3 Sonnet API) | $0.14/M tokens | $3.00/M tokens |
| Output tokens (Claude 3 Sonnet API) | $0.28/M tokens | $15.00/M tokens |
| Enterprise plan | Custom pricing | $50,000 minimum |
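The "$50,000 minimum" follows from the Enterprise terms above if the 70-seat minimum is billed for 12 months. The billing period is our assumption. The sketch below checks that figure and the Team plan’s annual-billing discount. It also shows how much DeepSeek-V3 usage the same money would buy at the blended rate of $0.42 per one million input plus one million output tokens.

```python
from decimal import Decimal

def annual_seat_cost(price_per_seat_month: Decimal, seats: int, months: int = 12) -> Decimal:
    """Minimum yearly spend for a per-seat plan (assumes 12 billed months)."""
    return price_per_seat_month * seats * months

# Assumption: Claude Enterprise's $60/seat, 70-seat minimum is billed for 12 months.
enterprise_minimum = annual_seat_cost(Decimal("60"), 70)

# Team plan: $30/month billed monthly vs $25/month billed annually.
team_annual_discount = (Decimal("30") - Decimal("25")) / Decimal("30")

# DeepSeek-V3 blended price for 1M input + 1M output tokens, as quoted in this article.
DEEPSEEK_BLENDED = Decimal("0.14") + Decimal("0.28")
equivalent_million_token_pairs = int(enterprise_minimum / DEEPSEEK_BLENDED)

print(f"Enterprise minimum: ${enterprise_minimum:,}")
print(f"Team annual-billing discount: {team_annual_discount:.1%}")
print(f"Same spend on DeepSeek-V3: {equivalent_million_token_pairs:,} x (1M in + 1M out)")
```

Under these assumptions, the Enterprise minimum is $50,400 a year. That sum would cover 120,000 million-token input/output pairs on DeepSeek-V3. Note that subscriptions and API usage are not directly comparable products.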

Scaling Costs Analysis: DeepSeek vs Claude 3

Scaling AI operations requires large infrastructure investments. Large GPU clusters can exceed $10 million a year in electricity alone, and surveyed executives expect average computing costs to rise 89% between 2023 and 2025.
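If it compounds evenly, an 89% rise over two years corresponds to roughly 37.5% a year. The following small sketch turns such a headline figure into a yearly rate and a projected bill. The $1 million starting bill is hypothetical.

```python
def implied_annual_growth(total_increase: float, years: int) -> float:
    """Constant yearly growth rate that compounds to the given total increase."""
    return (1 + total_increase) ** (1 / years) - 1

def project_cost(base: float, total_increase: float) -> float:
    """Cost after applying the total increase to a starting bill."""
    return base * (1 + total_increase)

rate = implied_annual_growth(0.89, 2)
print(f"Implied annual growth: {rate:.1%}")
# Hypothetical starting bill of $1M in 2023.
print(f"A $1M compute bill in 2023 becomes ${project_cost(1_000_000, 0.89):,.0f} by 2025")
```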

The scaling equation gets more complex as organizations grow their AI operations. Businesses face several cost factors during implementation:

- Infrastructure maintenance ranges from $500 million to $1 billion annually

- Capital expenditure on H100 GPUs approaches $1 billion for a fleet of roughly 33,000 units at $30,000 each

- Operational expenses grow with model complexity and usage volume

DeepSeek vs Claude 3: Future Pricing Predictions

AI pricing dynamics will see major changes according to industry analysts. Gartner predicts that by 2028, more than 50% of enterprises building large AI models from scratch will give up due to costs and complexity.

The future cost trajectory points to several key trends:

- Computing costs are expected to rise 89% between 2023 and 2025

- Infrastructure expenses will grow with model complexity

- Operational costs should decrease through optimization techniques

Companies need to weigh both immediate and long-term financial implications when implementing AI solutions. Survey data show that 70% of executives cite generative AI as a key driver of rising computing costs. Achieving a positive ROI requires careful planning of scaling patterns and infrastructure requirements.

The economics of AI deployment keep changing as companies find creative ways to manage costs. At list prices, businesses using DeepSeek-V3 can get comparable results for roughly 1/43 of Claude 3 Sonnet’s blended token price. That advantage matters more as organizations scale their AI operations.

Conclusion

DeepSeek emerges as the clear financial winner against Claude 3. Its blended token price is about 42.9 times lower than Claude 3 Sonnet’s, while its benchmark results remain competitive. Frontier-scale AI requires heavy infrastructure investment either way. However, DeepSeek’s efficient architecture activates only 37 billion parameters per token, which leads to major operational savings.

The numbers tell a compelling story. DeepSeek charges $0.14 per million input tokens compared with $3.00 for Claude 3 Sonnet, a game-changing difference for businesses. DeepSeek-V3 also scores 88.5% on English MMLU, ahead of Claude 3 Sonnet’s 77.1%, which suggests that lower costs need not mean lower quality.

Organizations should evaluate their AI investment options carefully, because initial setup costs remain substantial for both platforms. Still, DeepSeek has shown that it can deliver similar results at a fraction of the price, making it an attractive choice for cost-conscious enterprises. Its open-source release under the MIT License also gives businesses the flexibility to customize and scale their operations.