DeepSeek AI has made a remarkable entrance into the AI landscape, offering performance on par with OpenAI’s models at a fraction of the cost. The company reportedly spent just $6 million to train its model, compared to the reported $100 million investment for GPT-4. Following its January 2025 release, DeepSeek quickly became the most-downloaded free app on Apple’s US App Store. This impressive debut marks DeepSeek as a potential game-changer in the AI space.

The model’s capabilities are evident in its benchmark scores: 79.8% Pass@1 on AIME 2024 and 97.3% on MATH-500, matching or even surpassing OpenAI’s results.

These numbers tell only part of the story. DeepSeek’s chain-of-thought reasoning approach helps users solve complex mathematics and coding challenges accurately. This piece explains everything you need to start using this powerful and accessible AI tool.

What is DeepSeek AI: A Simple Explanation

DeepSeek, an AI company founded in 2023 in Hangzhou, China, brings a fresh take on artificial intelligence technology.

Understanding AI Language Models

DeepSeek uses a Mixture of Experts (MoE) architecture that activates only the expert sub-networks it needs for a given input. The model has 671 billion total parameters but runs quickly by using only about 37 billion of them for each token it processes. This selective activation cuts computing costs and lets individual experts specialize.
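
To make the idea of selective activation concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration under stated assumptions, not DeepSeek’s actual router: the softmax gate, the four toy experts, and the router scores are all made up for the example.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum over the selected experts only; the rest never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Toy experts (hypothetical): each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
scores = [0.1, 2.0, 0.3, 1.5]  # made-up router scores for one token

selected = route_top_k(scores, k=2)
output = moe_forward(1.0, experts, scores, k=2)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 37 / 671
print(selected, output, f"{active_fraction:.1%}")
```

With these figures, only about 5.5% of the parameters do work for any single token, which is where the compute savings come from.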

DeepSeek’s Core Features

The model’s design includes several innovations that set it apart. At DeepSeek’s core is Multi-Head Latent Attention, which speeds up attention and saves memory during inference. The system shines at:

- Math problems with 90.2% accuracy on MATH-500 evaluations

- Writing code that passes 82.6% of HumanEval-Multiple tests

- Instruction following with 86.1% on IF-Eval Prompt Strict assessments

How DeepSeek Is Different from Other AI Models

DeepSeek stands out with its clever approach to efficiency and accessibility. The reported training cost was just USD 6 million in computing power, about one-tenth of what others spent. The API charges USD 0.55 per million input tokens, which makes it a budget-friendly choice.

The MIT License makes DeepSeek open-source, so developers and researchers can freely explore, change, and use it within technical limits. The model also shapes its responses to line up with AI safety, privacy, and ethical guidelines.

DeepSeek handles long contexts well and can work with up to 128K tokens. It really shines when tackling reasoning-heavy tasks that have clear solutions. The model explains its thinking through chain-of-thought reasoning, which sets it apart from regular language models and makes it perfect for education and research.

Getting Started with DeepSeek

DeepSeek setup demands careful attention to system specifications and installation steps. Before starting, users should check that their system meets these basic requirements:

- Minimum 8GB RAM (16GB recommended)

- Multi-core processor (Quad-core or higher)

- High-performance GPU with CUDA support

- 50GB free storage space on SSD

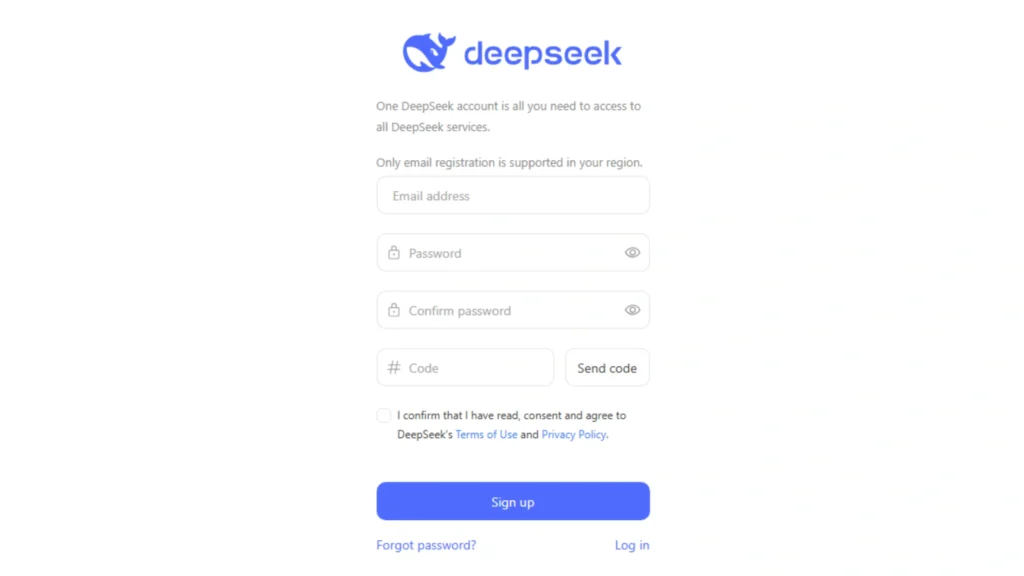

Setting Up Your DeepSeek Account

DeepSeek’s official platform is where registration begins. Users should look for the “Sign Up” button at the top-right corner of the platform’s homepage. The next step requires simple information like name, email address, and a strong password. A verification email arrives after submitting these details to complete the account activation.

Navigating the Interface

DeepSeek’s interface comes with a user-friendly dashboard that puts everything within easy reach. The platform has several key sections:

- A central chat window to interact with AI

- File attachment options to analyze documents

- Toggle options for different AI models

- Search functionality to make internet-based queries

Users can access the platform through browsers and mobile apps, which gives them flexibility in AI assistant interactions. The settings section lets users create tailored experiences through interface adjustments.

Basic Commands and Prompts

A clear command structure leads to the best results when working with DeepSeek. Specific, well-structured queries work better than vague questions. For example, users should add precise parameters and clear objectives to their prompts instead of asking broad questions.

Users can interact with the system in various ways:

- Direct text-based queries

- Document analysis through file uploads

- Internet-enabled searches for up-to-the-minute information

Developers can use DeepSeek’s OpenAI-compatible API through the OpenAI SDK, installed with a simple `pip3 install openai` command. The platform also has specialized prompts for different use cases like coding tasks or mathematical problems.
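
As a rough sketch of what that API compatibility looks like in practice, the snippet below points the OpenAI Python SDK at DeepSeek. Several details are assumptions to verify against the current DeepSeek API documentation: the base URL `https://api.deepseek.com`, the model name `deepseek-chat`, and the environment variable name `DEEPSEEK_API_KEY`, which is simply a name chosen for this example.

```python
import os

def build_messages(question, context=None):
    """Assemble a chat payload; optional context is prepended to the question."""
    user_text = f"{context}\n\n{question}" if context else question
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": user_text},
    ]

def ask_deepseek(question, context=None, model="deepseek-chat"):
    """Send one question to the API and return the reply text."""
    # Imported here so build_messages stays usable without the SDK installed.
    from openai import OpenAI

    client = OpenAI(
        api_key=os.environ["DEEPSEEK_API_KEY"],  # assumed variable name
        base_url="https://api.deepseek.com",     # assumed endpoint; check the docs
    )
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question, context),
    )
    return response.choices[0].message.content

# Only calls the network when a key is actually configured.
if __name__ == "__main__" and os.environ.get("DEEPSEEK_API_KEY"):
    print(ask_deepseek("Factor x^2 - 5x + 6 and show each step."))
```

Keeping the message-building step separate from the network call makes it easy to inspect or unit-test prompts before spending any tokens.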

Response optimization requires users to:

- Add specific parameters to questions

- Use relevant keywords to get targeted results

- Split complex queries into smaller, manageable parts

- Add context to get accurate responses

DeepSeek Models Comparison

DeepSeek’s model lineup shows impressive progress in AI development. The company’s approach combines sophisticated architecture with affordable training methods that challenge industry leaders.

DeepSeek 67B vs Other Versions

DeepSeek’s family includes several models optimized for specific tasks. Their flagship DeepSeek-R1, with 671 billion parameters, uses an innovative Mixture-of-Experts architecture that activates only 37 billion parameters during tasks. This selective activation system represents a substantial leap from their earlier 67B base model.

Here’s how the model lineup has grown:

- DeepSeek LLM (67B): The foundation model trained on 2 trillion tokens

- DeepSeek-V2: Focused on performance optimization and reduced training costs

- DeepSeek-V3: Advanced 671B parameter model with boosted efficiency

- DeepSeek-R1: Latest reasoning-focused model built on V3’s architecture

Choosing the Right Model

Your choice of DeepSeek model depends on computational needs and specific use cases. DeepSeek-V3 stands out for its efficiency, as it reportedly cost about one-tenth as much to train as comparable models. The API pricing reflects this efficiency. DeepSeek-R1 charges USD 0.55 per million input tokens and USD 2.19 per million output tokens, well below the USD 15.00 and USD 60.00 respectively that competing models such as OpenAI’s o1 charge.
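
The per-token prices above translate into a simple cost formula. The sketch below applies it to a hypothetical workload of 2 million input tokens and 500,000 output tokens; the workload size and the `Pricing` helper are illustrative assumptions, while the prices are the ones quoted in this section.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    """Prices in USD per one million tokens."""
    input_per_million: float
    output_per_million: float

    def cost(self, input_tokens, output_tokens):
        """Total cost in USD for a given number of input and output tokens."""
        return (
            input_tokens * self.input_per_million
            + output_tokens * self.output_per_million
        ) / 1_000_000

deepseek_r1 = Pricing(input_per_million=0.55, output_per_million=2.19)
competitor = Pricing(input_per_million=15.00, output_per_million=60.00)

# Hypothetical workload, chosen only for illustration.
input_tokens, output_tokens = 2_000_000, 500_000

r1_cost = deepseek_r1.cost(input_tokens, output_tokens)
other_cost = competitor.cost(input_tokens, output_tokens)
print(f"DeepSeek-R1: ${r1_cost:.2f}, competitor: ${other_cost:.2f}")
```

For this workload the bill comes to roughly USD 2.20 versus USD 60.00. The exact ratio depends on the mix of input and output tokens, so it is worth rerunning the numbers with your own usage profile.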

Companies that need local deployment can use DeepSeek’s distilled versions from 1.5 billion to 70 billion parameters. These smaller models deliver impressive performance on standard hardware.

Performance Benchmarks

DeepSeek models shine in various benchmarks. DeepSeek-R1 hits impressive scores with 79.8% on AIME 2024 and 97.3% on MATH-500, beating OpenAI’s o1-1217 which scored 79.2% and 96.4% respectively.

The earlier DeepSeek LLM 67B Chat model reached a 73.78% pass rate on HumanEval for coding and scored 84.1% on GSM8K for mathematical reasoning in a zero-shot setting. DeepSeek-R1 stays competitive in general knowledge benchmarks with 90.8% on MMLU, just behind OpenAI’s 91.8%.

DeepSeek-V3’s advanced architecture proves its worth through exceptional efficiency. It matches Claude 3.5 Sonnet’s performance while using fewer computational resources. This balance of performance and efficiency makes DeepSeek a compelling choice for organizations looking for affordable AI solutions without sacrificing capabilities.

Real-World Testing Results

Testing across multiple domains shows DeepSeek’s capabilities in real-life scenarios. Research teams and developers have done thorough testing to assess how the platform works in practice.

Testing Methodology

Research teams used a systematic approach to assess DeepSeek’s capabilities. Scientists at Ohio State University tested both DeepSeek-R1 and OpenAI’s models. They used 20 tasks from ScienceAgentBench that focused on data analysis, visualization, and real-world applications. Oxford University’s mathematicians also tested how well the model creates proofs in functional analysis.

The testing process focused on three key areas:

- Scientific data analysis and visualization

- Mathematical reasoning and proof generation

- Code generation and debugging capabilities

Performance Metrics

DeepSeek-R1 showed remarkable results in real-world applications. The model needed more processing time but ran at costs about 13 times lower than other platforms. Tests that cost £300 (USD 370.00) with other models were done for less than USD 10.00 with DeepSeek.

The model stood out in coding challenges. DeepSeek-V3 completed three out of four complex coding tasks successfully. Even so, both the V3 and R1 models struggle with specialized scripting environments.

User Experience Findings

Real-world testing revealed several practical issues. Users found that DeepSeek’s sign-up process accepted only certain email setups, which mostly limited access to public cloud email addresses. The platform sometimes responded slowly, so tasks took longer to complete.

The model took a unique approach to solve math problems. It worked step by step through tough problems, going back when needed and trying different methods until it found the right answer. This systematic way of solving problems worked well for tasks that needed long chains of reasoning.

The platform makes great use of resources in practice. DeepSeek breaks queries into smaller, manageable tasks through test-time compute and inference-time scaling. This approach helps handle complex problems while keeping computational costs reasonable.

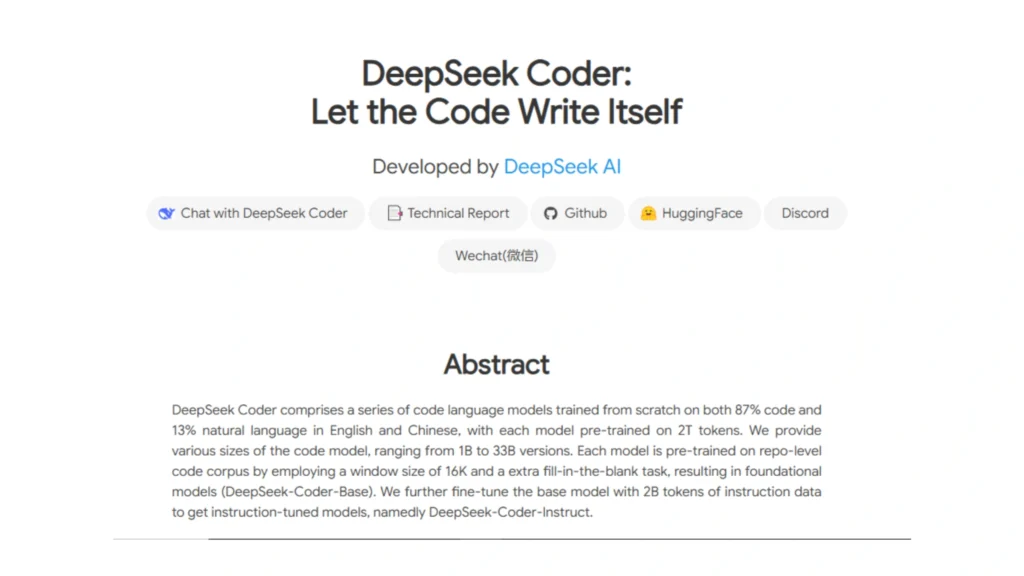

DeepSeek Coder Deep Dive

DeepSeek Coder is a trailblazing open-source code language model released in November 2023. The model was trained on 2 trillion tokens and shows remarkable abilities in code generation and comprehension.

Code Generation Capabilities

DeepSeek Coder excels at multiple coding tasks. The model delivers state-of-the-art results among open-source models in code completion. It was trained on a dataset of 87% code and 13% natural language content. The system provides reliable support for project-level code completion and infilling tasks.

The model does more than simple code generation. It handles larger codebases with its 16,000-token context window, which enables detailed project-level assistance. DeepSeek-Coder-V2, the latest version, takes this further with a 128,000-token context window. This enhancement supports more extensive code analysis and generation tasks.

Language Support

DeepSeek Coder supports an impressive range of programming languages. The original version worked with over 80 programming languages. The latest V2 release now covers 338 programming languages, so developers across tech stacks can use the model effectively.

The model’s training data has both English and Chinese natural language content. This makes it valuable for:

- Professional developers seeking code generation and completion

- Educational institutions requiring clear code explanations

- Research teams working on multilingual development projects

Best Practices for Coding Tasks

Developers should structure their requests carefully to get the best results from DeepSeek Coder. DeepSeek’s models post strong results on the HumanEval coding benchmark, with a reported 73.78% pass rate, which suggests they are reliable for practical coding tasks.

Developers should use its fill-in-the-middle code completion feature. This feature enables contextual code generation where the model understands and completes code segments based on surrounding context. The model also excels at debugging tasks and offers explanations and suggestions to optimize existing code.
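
Fill-in-the-middle works by giving the model the code before and after a gap and asking it to produce what belongs in between. The sketch below shows the general prompt shape only. The sentinel strings `<FIM_BEGIN>`, `<FIM_HOLE>`, and `<FIM_END>` are placeholders invented for this example; the real special tokens are model-specific and should be taken from the model’s tokenizer documentation.

```python
def build_fim_prompt(prefix, suffix,
                     begin="<FIM_BEGIN>", hole="<FIM_HOLE>", end="<FIM_END>"):
    """Wrap the code around a gap in sentinel markers (placeholders here)."""
    return f"{begin}{prefix}{hole}{suffix}{end}"

def splice_completion(prefix, completion, suffix):
    """Put the model's completion back between the surrounding code."""
    return prefix + completion + suffix

prefix = "def mean(values):\n    total = "
suffix = "\n    return total / len(values)\n"

prompt = build_fim_prompt(prefix, suffix)

# A completion the model might plausibly return (written by hand here).
completion = "sum(values)"
print(splice_completion(prefix, completion, suffix))
```

The more relevant surrounding code the prefix and suffix carry, the more context the model has for filling the gap.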

Performance metrics highlight its practical value. DeepSeek-Coder-Base-33B performs better than existing open-source code LLMs while staying efficient across different configurations. Teams can choose from multiple versions ranging from 1.3B to 33B parameters based on their computational resources and project needs.

DeepSeek Math Capabilities

Mathematical excellence is a cornerstone of DeepSeek’s capabilities. The platform performs strongly across many types of computational challenges, and prestigious competitions and standard benchmarks have put its mathematical abilities to the test.

Mathematical Problem Solving

DeepSeek R1 shines in competitive assessments of mathematical skill. The model achieved a 79.8% pass@1 score on the American Invitational Mathematics Examination (AIME) 2024, placing it among top-tier AI systems. It also reached 97.3% pass@1 on the MATH-500 dataset.
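
For readers unfamiliar with the pass@1 metric, the sketch below implements the widely used unbiased pass@k estimator: given n sampled answers per problem, of which c are correct, it estimates the chance that at least one of k samples is right. This is the standard formula, not necessarily the exact evaluation script behind the scores above, and the sample counts are made up.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate P(at least one of k samples is correct) from n samples, c correct."""
    if n - c < k:
        # Fewer than k wrong answers exist, so any k samples include a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

# Made-up example: 10 samples for one problem, 3 of them correct.
single_try = pass_at_k(n=10, c=3, k=1)
five_tries = pass_at_k(n=10, c=3, k=5)
print(f"pass@1 = {single_try:.2f}, pass@5 = {five_tries:.2f}")
```

For k = 1 the estimator reduces to the plain fraction of correct samples, c / n, and a benchmark score is the average of that value over all problems.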

The platform’s mathematical abilities cover:

- Advanced mathematical reasoning and proofs

- Complex problem-solving in geometry and algebra

- High-level mathematical competition problems

- Real-time mathematical decision-making

Formula Recognition

DeepSeek-VL shows sophisticated formula recognition capabilities through its multimodal understanding system. It uses a hybrid vision encoder that supports 1024 x 1024 image input to process mathematical formulas with high precision. This visual processing helps the system handle complex mathematical notations and diagrams effectively.

The model’s formula recognition builds on extensive training with approximately 400B vision-language tokens. This training leads to accurate interpretation of:

- Mathematical equations and expressions

- Scientific notations and symbols

- Complex mathematical diagrams

- Technical mathematical literature

Step-by-Step Solutions

DeepSeek stands out with its thorough step-by-step approach to mathematical problem-solving. The model can use multiple solution methods to verify an answer; demonstrations include techniques like the Pythagorean theorem and Heron’s formula. This systematic approach yields a precise solution while also teaching the underlying method.
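
The idea of checking one answer with two independent methods is easy to reproduce. As a small worked example (our own, not output from the model), the sketch below computes the area of a 3-4-5 triangle twice: once from its legs after confirming it is a right triangle with the Pythagorean theorem, and once with Heron’s formula.

```python
import math

def is_right_triangle(a, b, c):
    """Pythagorean check: the two shorter sides squared sum to the longest squared."""
    x, y, z = sorted((a, b, c))
    return math.isclose(x * x + y * y, z * z)

def area_from_legs(a, b, c):
    """Area of a right triangle as half the product of its two legs."""
    x, y, _ = sorted((a, b, c))
    return 0.5 * x * y

def area_heron(a, b, c):
    """Heron's formula: works for any triangle, given only the side lengths."""
    s = (a + b + c) / 2
    return math.sqrt(s * (s - a) * (s - b) * (s - c))

sides = (3, 4, 5)
assert is_right_triangle(*sides)

area_1 = area_from_legs(*sides)
area_2 = area_heron(*sides)
print(area_1, area_2, math.isclose(area_1, area_2))
```

Both methods give an area of 6, and agreement between two independent routes is good evidence that the result is right.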

The platform’s mathematical reasoning comes from specialized training on math-related tokens from Common Crawl. DeepSeekMath started with DeepSeek-Coder-v1.5 7B and went through additional pre-training on 500B tokens. The result? Mathematical reasoning abilities that beat existing open-source base models by more than 10% in absolute terms.

Chain-of-thought prompting helps the model explain mathematical concepts and problem-solving strategies step by step. This approach works especially well in educational settings. The system breaks down complex problems into manageable steps without losing accuracy or clarity in its explanations.

Repeated testing shows DeepSeek’s mathematical capabilities match industry leaders. Mathematicians at prestigious institutions praise the platform’s ability to create proofs in abstract fields like functional analysis. But users need existing mathematical knowledge to assess the quality of proofs and solutions effectively.

Practical Applications

DeepSeek shows remarkable versatility and budget-friendly solutions in a variety of industries. The platform processes complex tasks at a fraction of traditional costs, creating new possibilities for businesses and researchers.

Content Creation

DeepSeek’s content generation capabilities go way beyond simple text creation. The platform creates unique content ideas for bloggers, content creators, and social media managers. Its sophisticated language processing helps with writing, editing, and keyword optimization tasks that transform content teams’ operations.

The platform’s content creation strengths include:

- Writing assistance for blog posts and articles

- Social media caption generation

- Video script development

- Email newsletter composition

- Keyword-optimized content production

DeepSeek’s approach to content creation is different from traditional AI tools because of its advanced reasoning capabilities. The system analyzes context, meaning, and intent to deliver precise and tailored results.

Data Analysis

DeepSeek introduces state-of-the-art capabilities with budget-friendly processing in data analysis. Users can access live insights as the platform processes data instantly. The platform analyzes complex financial models faster and more accurately than conventional tools.

The system’s data analysis capabilities excel in several key areas:

- Live data processing and visualization

- Complex financial modeling and simulation

- Market trend analysis and prediction

- Consumer behavior pattern recognition

Companies see tangible benefits from these capabilities. CFOs upload financial data and get instant simulations of cost-cutting strategies or investment opportunities. Small logistics companies in emerging markets can optimize their supply chains with insights that were previously available only to well-funded tech giants.

Research Assistance

Academic institutions worldwide have noticed DeepSeek’s research capabilities. The platform performs as well as industry-leading models in evidence-based scientific tasks, including bioinformatics, computational chemistry, and cognitive neuroscience. DeepSeek changes researchers’ approach to their work, whether they analyze patient medical data or speed up literature reviews.

The platform’s research applications show exceptional strength in:

- Scientific data analysis and visualization

- Literature review automation

- Hypothesis development support

- Complex problem-solving in specialized fields

Note that DeepSeek’s budget-friendly approach makes it valuable for research institutions. Tasks that cost £300 (USD 370.00) with other models now cost less than USD 10.00. The platform maintains competitive accuracy levels and matches or exceeds proprietary alternatives’ performance in scientific measures.

DeepSeek’s effect on healthcare research stands out. Rural hospitals can provide early diagnoses and tailored treatment plans without expensive infrastructure. The platform analyzes large datasets and extracts meaningful insights, making it invaluable for medical research and patient care optimization.

DeepSeek learns and improves based on user queries and interactions to ensure research integrity. Its sophisticated data integration features and adaptive capability make it a powerful ally in advancing scientific discovery and state-of-the-art solutions.

Tips and Best Practices

You need to know DeepSeek’s ins and outs and use proven strategies to get the best results. Our tests and research have revealed several best practices that will help you get the most out of the platform while avoiding common mistakes.

Optimizing Prompts

The way you engineer prompts is vital for getting good results with DeepSeek. When a prompt includes data, the quality of the answer depends a lot on how well you prepare that data. Here’s how to get your input data ready:

- Use clean, structured datasets (CSV, Excel)

- Remove missing values and outliers

- Apply proper data preprocessing

- Keep datasets balanced across categories

Clear and specific prompts work better than long, vague ones. The model gives better answers to specific requests like “Calculate the average sales for Q1 2024” rather than general ones like “Analyze this”. Breaking down complex tasks into smaller steps will help you get more accurate responses.
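
The data preparation and prompt advice above can be combined in a few lines. The sketch below uses only the standard library to drop rows with missing values from a small, made-up CSV, and then builds a specific prompt around the cleaned data. The column names and figures are invented for illustration, and outlier handling is left out to keep the example short.

```python
import csv
import io
from statistics import mean

# Made-up sales data; the February value is missing on purpose.
RAW_CSV = """month,sales
2024-01,1200
2024-02,
2024-03,1500
2024-04,900
"""

def load_clean_rows(text):
    """Parse CSV text and skip rows whose sales value is missing."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        value = (row.get("sales") or "").strip()
        if not value:
            continue
        rows.append({"month": row["month"], "sales": float(value)})
    return rows

def first_quarter(rows, year="2024"):
    """Keep only January through March of the given year."""
    months = {f"{year}-01", f"{year}-02", f"{year}-03"}
    return [r for r in rows if r["month"] in months]

rows = load_clean_rows(RAW_CSV)
q1 = first_quarter(rows)
q1_average = mean(r["sales"] for r in q1)  # local check to compare with the reply

data_lines = "\n".join(f"{r['month']}: {r['sales']:.0f}" for r in q1)
prompt = f"Calculate the average sales for Q1 2024 from this data:\n{data_lines}"
print(prompt)
```

Computing the expected answer locally, as `q1_average` does here, gives you a quick way to sanity-check what the model returns.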

Avoiding Common Mistakes

Technical issues can affect how well DeepSeek works. Poor internet connections often lead to worse results, so you need to keep an eye on your connection quality. Old software versions might miss important fixes that could affect the model’s confidence.

Many users assume the model always keeps track of context. Tests show that DeepSeek R1 needs explicit context checks, especially for complex coding tasks. The model thinks well but works best with structured guidance instead of open questions.

Performance Optimization

Fine-tuning is central to making DeepSeek work better. Here are the main steps:

- Selecting an appropriate pre-trained model

- Collecting task-specific data

- Applying training frameworks

- Watching for potential overfitting

- Evaluating using validation sets

Adjusting hyperparameters makes a big difference in how well the model performs. You need to identify key settings like learning rate and batch size, then find the right ranges for each. Keep track of validation loss and accuracy to make sure fine-tuning works well.
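
One common way to organize this kind of tuning is a small grid search with early stopping on validation loss. The sketch below shows the bookkeeping only. The `fake_train` function and its loss curves are made-up stand-ins for real fine-tuning runs, and the learning rates and batch sizes are arbitrary example values.

```python
from itertools import product

def best_epoch(val_losses, patience=2):
    """Return (epoch, loss) of the best validation loss before early stopping."""
    best_loss, best_idx, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_idx, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss stopped improving: likely overfitting
    return best_idx, best_loss

def grid_search(train_fn, learning_rates, batch_sizes, patience=2):
    """Try every combination and keep the one with the lowest validation loss."""
    results = []
    for lr, batch_size in product(learning_rates, batch_sizes):
        epoch, loss = best_epoch(train_fn(lr, batch_size), patience)
        results.append(
            {"lr": lr, "batch_size": batch_size, "epoch": epoch, "val_loss": loss}
        )
    return min(results, key=lambda r: r["val_loss"])

# Made-up validation-loss curves standing in for real training runs.
FAKE_RUNS = {
    (1e-5, 16): [0.90, 0.80, 0.75, 0.74, 0.74],
    (1e-5, 32): [0.92, 0.85, 0.80, 0.78, 0.77],
    (1e-4, 16): [0.70, 0.55, 0.60, 0.66, 0.50],
    (1e-4, 32): [0.75, 0.60, 0.58, 0.59, 0.61],
}

def fake_train(lr, batch_size):
    """Hypothetical trainer: returns one validation loss per epoch."""
    return FAKE_RUNS[(lr, batch_size)]

best = grid_search(fake_train, learning_rates=[1e-5, 1e-4], batch_sizes=[16, 32])
print(best)
```

Note that the run with learning rate 1e-4 and batch size 16 is stopped after two epochs without improvement, so its later dip to 0.50 is never seen. A longer patience trades extra compute for the chance of catching such late improvements.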

The way DeepSeek models are built affects how they learn and make decisions. Testing different model structures can help them work better with new situations. Regular updates with fresh data help keep the model relevant and effective as things change.

DeepSeek handles complex queries efficiently thanks to its unique approach to test-time compute and inference-time scaling. This helps a lot when you’re working on tasks that need lots of computing power but want to keep costs reasonable.

Confidence calibration helps ensure the model performs reliably. These adjustments make output probabilities match real performance metrics. Good calibration means the model’s confidence levels actually show how well it can handle different tasks.
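
A simple way to measure how well confidence matches real performance is the expected calibration error (ECE): group predictions into confidence bins and compare each bin’s average confidence with its actual accuracy. The sketch below is a generic illustration with made-up numbers. It assumes you already have a confidence score and a correct/incorrect label for each answer, which is not something the chat interface reports by itself.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between confidence and accuracy across bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(conf for conf, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += (len(items) / total) * abs(avg_conf - accuracy)
    return ece

# Made-up example: the model claims 90% confidence but is right 3 times out of 4.
confidences = [0.9, 0.9, 0.9, 0.9]
correct = [True, True, True, False]

ece = expected_calibration_error(confidences, correct)
print(f"ECE = {ece:.2f}")
```

An ECE near zero means stated confidence can be taken at face value; here the gap of 0.15 shows the model is overconfident on this toy sample.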

The platform works well for coding tasks too. Clear prompts with specific context and requirements help DeepSeek write better code. This method works better in real coding situations, where clear instructions lead to more accurate code.

Scientists find that preparing data properly and picking the right model makes DeepSeek work best. Research teams have saved money while keeping their results accurate. Smart optimization means tasks that used to cost hundreds of dollars now cost much less but still give great results.

Conclusion

DeepSeek matches the performance of leading AI platforms but costs much less to run. Its innovative Mixture of Experts architecture activates only the parameters it needs, which delivers strong results in a variety of applications, from complex mathematical proofs to advanced code generation.

Real-world testing shows how practical DeepSeek really is. Tasks that used to cost hundreds of dollars now run for under $10, and the accuracy stays just as high. These affordable costs, plus the model’s impressive scores on AIME 2024 and MATH-500, make DeepSeek a strong alternative to more established AI platforms.

DeepSeek’s sophisticated chain-of-thought reasoning makes it unique, especially for complex math and coding challenges. While it sometimes takes longer to process than other platforms, it makes up for this with better cost efficiency and detailed step-by-step solutions. This methodical approach works great for education and research, where understanding the process matters as much as getting the right answer.

Overall, DeepSeek represents a big leap forward in making advanced AI available to everyone. The platform matches premium services at a fraction of the cost, which creates new opportunities for businesses, researchers, and developers worldwide.